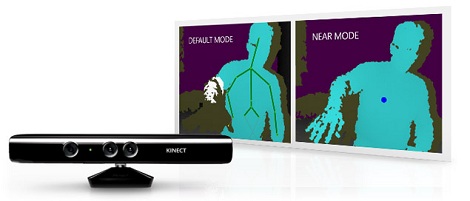

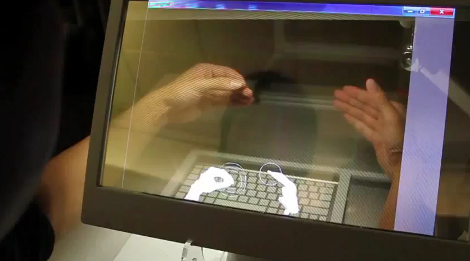

Upon the release of the Kinect, Microsoft showed off its golden child as the beginnings of a revolution in user interface technology. The skeleton and motion detection promised a futuristic, hand-waving “Minority Report-style” interface where your entire body controls a computer. The reality hasn’t exactly lived up to the expectations, but [Steve], along with his coworkers at Amulet Devices, has vastly improved the Kinect’s skeleton recognition so people can use a Kinect sitting down.

One huge drawback of using the Kinect for a Minority Report UI in a home theater is that Microsoft’s skeleton recognition doesn’t work well when you are sitting down. Instead of relying on the built-in skeleton recognition that comes with the Kinect, [Steve] rolled his own skeleton detection using Haar classifiers.

Detecting Haar-like features is used in many computer vision applications; it’s a great, not-very-computationally-intensive way to detect faces and body positions with a simple camera. The software has to be trained first, and [Steve]’s app spent several days training itself. The results were worth it, though: the Kinect now recognizes [Steve] waving his arm while he is lying down on the couch.
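For the curious, here is a rough idea of why Haar-like features are so cheap to compute. This is only a minimal sketch of the general technique, not [Steve]’s code: the function names (`integral_image`, `rect_sum`, `two_rect_feature`) and the toy image are made up for illustration. A Haar-like feature is just the difference between the pixel sums of adjacent rectangles, and a summed-area table (integral image) lets you get any rectangle sum with four lookups.

```python
def integral_image(img):
    """Build a summed-area table with an extra zero row and column."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum of the pixels in the rectangle at top-left (x, y), size w by h."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_rect_feature(ii, x, y, w, h):
    """Horizontal two-rectangle Haar-like feature: left half minus right half."""
    half = w // 2
    left = rect_sum(ii, x, y, half, h)
    right = rect_sum(ii, x + half, y, half, h)
    return left - right


# Hypothetical 4x4 grayscale patch: bright on the left, dark on the right.
img = [[9, 9, 1, 1]] * 4
ii = integral_image(img)
print(two_rect_feature(ii, 0, 0, 4, 4))  # prints 64
```

A trained classifier combines the responses of many such features, evaluated at different positions and scales, to decide whether a region looks like the thing it was trained on. The training is the slow part; evaluating the features afterwards is fast.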

Not content to stop there, [Steve] also added voice recognition to his Kinect home theater controller, a fitting addition as his employer makes a voice recognition remote control. The recognition software seems to work very well, even with the wistful Scottish accent [Steve] has honed over a lifetime.

[Steve]’s employer is giving away their improved Kinect software that works for both the Xbox and Windows Kinects. If you’re ever going to do something with a Kinect that isn’t provided with the SDKs and APIs we covered earlier today, this will surely be an invaluable resource.

You can check out [Steve]’s demo of the new Kinect software after the break.
