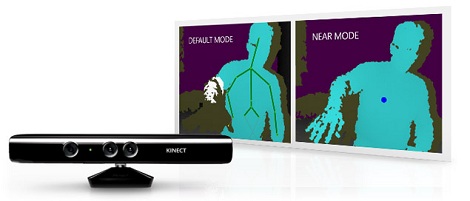

Here’s another virtual-sandbox-meets-real-sandbox project. A team at UC Davis is behind this depth-mapped and digitally projected sandbox environment. The physical sandbox uses fine-grained sand, which serves nicely as both a projection surface and a building medium. A Kinect depth camera mounted overhead measures the surface, and an offset digital projector adds the virtual layer. As you dig or build up elevation in parts of the box, the depth camera picks up the change and the projected view is updated to match in real time. As you can see after the break, this starts with topographical data, but can also include enhancements like the water feature seen above.
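To make the depth-to-projection idea concrete, here is a minimal sketch of how a depth frame could be turned into a topographic image. It is not the UC Davis team's code: the function names, the sensor height, the water level, the color bands, and the contour spacing are all assumptions picked for illustration, and the depth frame is a plain list of lists rather than real Kinect output.

```python
# Hypothetical values: none of these come from the UC Davis project.
SENSOR_HEIGHT_MM = 1000   # assumed distance from the depth camera to the box floor
WATER_LEVEL_MM = 50       # assumed elevation below which we draw water
CONTOUR_STEP_MM = 25      # assumed vertical spacing between contour lines


def elevation_map(depth_mm):
    """Convert per-pixel camera distances into heights above the box floor."""
    return [[SENSOR_HEIGHT_MM - d for d in row] for row in depth_mm]


def color_for(height_mm):
    """Pick an RGB color band for one elevation value (bands are made up)."""
    if height_mm < WATER_LEVEL_MM:
        return (0, 0, 255)      # water
    if height_mm < 100:
        return (0, 160, 0)      # lowland
    if height_mm < 200:
        return (160, 120, 60)   # hills
    return (255, 255, 255)      # peaks


def render(depth_mm):
    """Build the RGB frame to project, with black contour lines drawn on top."""
    heights = elevation_map(depth_mm)
    frame = [[color_for(h) for h in row] for row in heights]
    for y, row in enumerate(heights):
        for x, h in enumerate(row):
            band = h // CONTOUR_STEP_MM
            # Mark a contour where the right or lower neighbor is in another band.
            right = row[x + 1] // CONTOUR_STEP_MM if x + 1 < len(row) else band
            below = heights[y + 1][x] // CONTOUR_STEP_MM if y + 1 < len(heights) else band
            if band != right or band != below:
                frame[y][x] = (0, 0, 0)
    return frame
```

Calling `render` on every new depth frame would give the effect described above: pile up sand, the measured distance shrinks, the elevation rises, and the pixel moves into a higher color band. A real setup would also need to calibrate the offset projector against the camera, which this sketch ignores.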

It’s a big step forward in resolution compared to the project from which the team took inspiration. We have already seen this concept used as an interactive game, but we wonder about its potential for quickly generating natural environments for digital gameplay. Just build up your topography in sand, jump into the video game to make sure it has the attributes you want, then start adding trees and structures.

Don’t miss the video demo embedded after the break.
