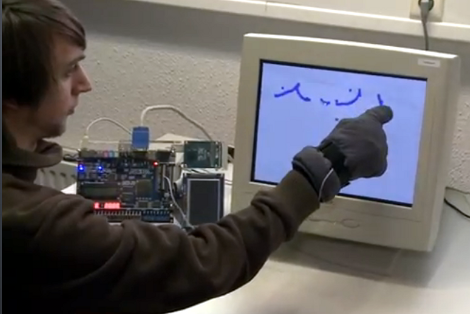

[Sprite_tm], a name many of you will recognize from these pages, has wasted no time in replicating the latest cool thing in a much simpler fashion. En Garde is a touch sensor that can detect up to 32 different points of contact on… whatever you use as the surface. He couldn’t sit idly by and let the Disney-funded one from yesterday keep the spotlight. As you can see in the video, it works pretty well. If he hadn’t told you that his can only detect up to 32 points as opposed to the other’s 200, you probably wouldn’t even notice the difference. Of course, [Sprite_tm] also shares how you could easily beef his version up to be even more precise. You can also download his source code and schematics from his site and give it a try yourself.
